The News: Yesterday, AWS CEO Andy Jassy delivered a nearly three-hour keynote at this year's AWS re:Invent, revealing a slew of updates from Amazon Web Services.

Analyst Take: There is no way to unpack Andy's entire keynote; there were simply too many announcements across compute, storage, networking, AI/ML, developer tools, software, and more. However, a handful of very big announcements warrant immediate coverage and analysis, so let's start there.

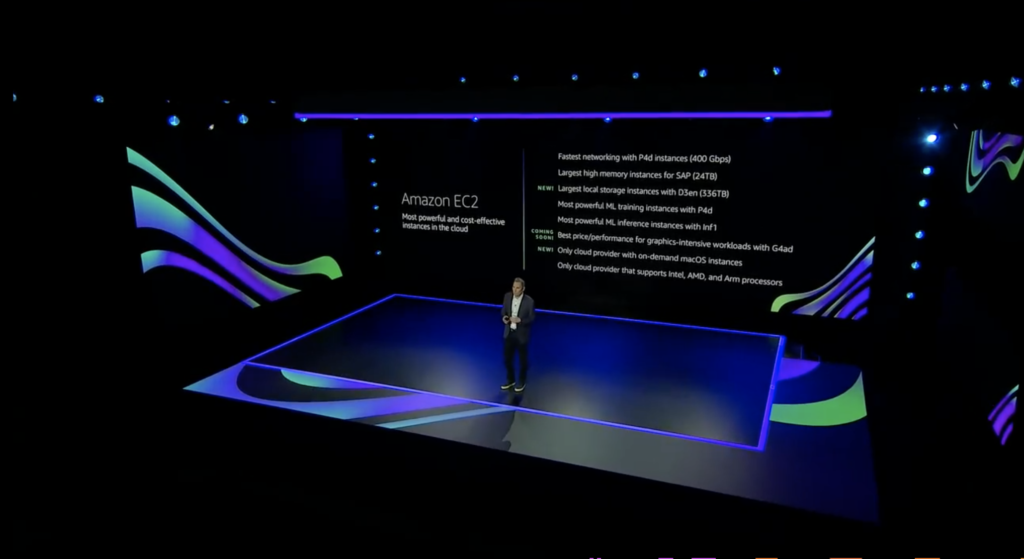

EC2 Updates

Overview: Amazon made a series of updates and new offerings in its EC2 portfolio, including instances for high-performance graphics workloads (G4ad), network-intensive workloads (C6gn), and memory-intensive applications (R5b). Other launches included new macOS instances as well as updates to several existing instance types. In short, there were a lot of updates. Here are a few key developments from Amazon's release.

Coming Soon – Amazon EC2 G4ad Instances Featuring AMD GPUs for Graphics Workloads

Customers with high performance graphic workloads, such as those in game streaming, animation, and video rendering, are always looking for higher performance at less cost. Today, we announce that new Amazon Elastic Compute Cloud (EC2) instances in the G4 instance family will be available soon to improve performance and reduce cost for graphics-intensive workloads.

New EC2 C6gn Instances – 100 Gbps Networking with AWS Graviton2 Processors

Today, we’re expanding our broad Arm-based Graviton2 portfolio with C6gn instances that deliver up to 100 Gbps network bandwidth, up to 38 Gbps Amazon Elastic Block Store (EBS) bandwidth, up to 40% higher packet processing performance, and up to 40% better price/performance versus comparable current generation x86-based network optimized instances.

New – Amazon EC2 R5b Instances Provide 3x Higher EBS Performance

R5 instances are designed for memory-intensive applications such as high-performance databases, distributed web scale in-memory caches, in-memory databases, real time big data analytics, and other enterprise applications. Today, we announce the new R5b instance, which provides the best network-attached storage performance available on EC2.

Analysis: AWS continues to develop a comprehensive portfolio of Elastic Compute Cloud (EC2) instances to address customers' varying needs. That diversity gives the company real breadth, and with the continued development of its Arm-based Graviton2 processors, AWS is becoming more of a juggernaut in silicon. The macOS instances have gotten the most press, but I expect the instances highlighted above to be heavily consumed as they become generally available (GA).

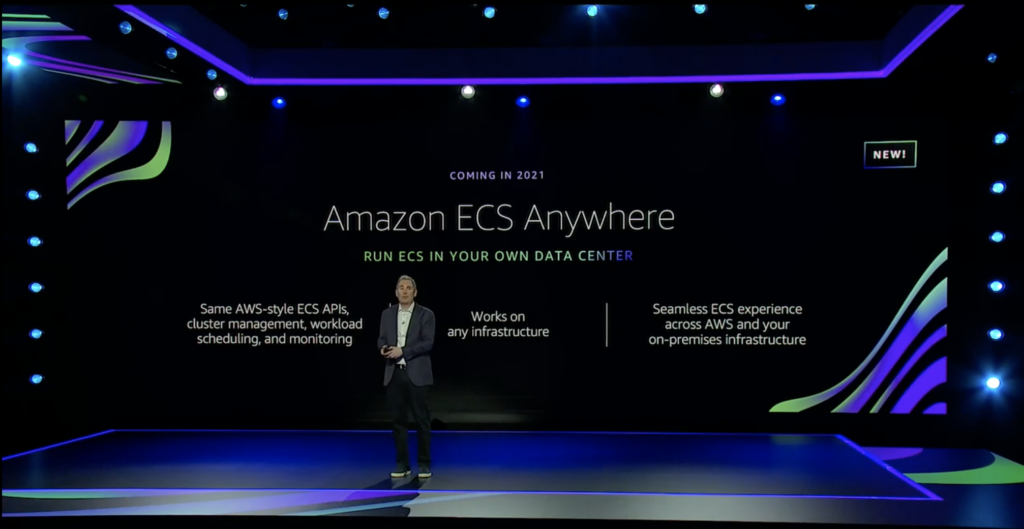

Containers Anywhere

Overview: Amazon announced multiple options for deploying containers on-premises with AWS. Amazon ECS Anywhere enables customers to run Amazon Elastic Container Service (ECS) in their own data centers, and Amazon EKS Anywhere provides the ability to run Amazon Elastic Kubernetes Service (EKS) in their own data centers.

Analysis: Amazon ECS has gained popularity because customers see it as simple to use and deploy. However, a setback for AWS has been that customer requirements often call for deployments beyond AWS-owned infrastructure, and until now AWS hasn't had an answer for this. What fans of ECS have sought is a single experience that gives them the flexibility they need.

With Amazon ECS Anywhere (expected to be generally available in 2021), which builds upon Amazon ECS, customers will be able to deploy native Amazon ECS tasks in any environment, including both AWS-managed infrastructure and customer-managed infrastructure. What makes this a very potent offering is that all of it can be managed through a fully AWS-managed control plane that runs in the cloud and is always up to date.

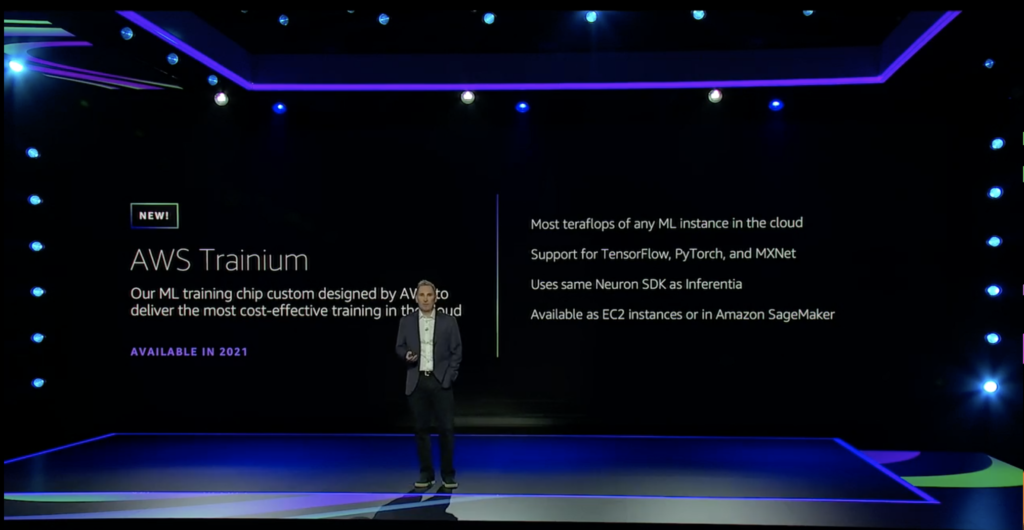

Trainium and the Silicon Show

Overview: AWS announced the launch of AWS Trainium, the company’s next-gen custom chip dedicated to training machine learning models.

Analysis: AWS continues to expand its silicon portfolio with Trainium, its newest custom chip designed to deliver highly cost-effective training in the cloud. The company also announced very intriguing instances based on Habana Gaudi accelerators from Intel's Habana Labs, but the Trainium announcement that immediately followed acts as a very nice complement to the company's popular Inferentia chip for AI inference. The focus of the offering is training performance in the cloud: according to the company, Trainium will offer higher performance than any competing training offering in the cloud, with support for TensorFlow, PyTorch, and MXNet. The goal of Trainium is to make fast, low-cost training accessible to more customers. According to AWS, the numerical goal is 30% higher throughput and 45% lower cost-per-inference compared to standard AWS GPU instances.

Directionally, you can see AWS wants its own silicon wherever possible, but it appears committed to continuing to offer variants from the likes of NVIDIA and Intel. I see this as a smart way to offer AI/ML training at scale in the cloud while giving customers choice.

Babelfish for Aurora PostgreSQL

Overview: Another big focus of Jassy's keynote was making it easier to migrate from SQL Server to Amazon Aurora. One new offering designed especially to simplify migrations is Babelfish for Aurora PostgreSQL.

Analysis: This announcement is powerful because of its simplicity and its implications. Whether or not they go serverless, users can cut a lot of costs, but this isn't just about cost reduction; it's about near-seamless transitions from SQL Server to Aurora. Babelfish enables PostgreSQL to understand both the commands (T-SQL) and the wire protocol used by applications designed for Microsoft SQL Server, without material changes to libraries, database schema, or SQL statements. This streamlines migrations with minimal developer effort. AWS touted that the tool is focused on “correctness,” meaning that applications designed to use SQL Server functionality will behave the same on PostgreSQL as they would on SQL Server. This will increase AWS' competitiveness in migrations away from SQL Server deployments, which often carry higher costs.

Note: An open source version of the offering is expected to be available in 2021.

Proton

Overview: AWS Proton is a new service that automates container and serverless application development and deployment.

Analysis: One of the biggest challenges I regularly hear about containers is delivery at scale. The idea is good, but in practice it becomes quite complex, which has caused some enterprises to struggle with or pause container deployments. AWS addresses that challenge with Proton, which lets platform teams define a “stack” describing everything needed to provision, deploy, and monitor a service, including compute, networking, code pipeline, security, and monitoring. In effect, the company built a service designed to help infrastructure teams manage microservices and update infrastructure without hurting developer productivity. I believe this solves a big problem, and as AWS also extends its container capabilities on-premises, as described above, its container portfolio is expanding nicely to meet increasingly complex enterprise needs.

Futurum Research provides industry research and analysis. These columns are for educational purposes only and should not be considered in any way investment advice.


Image: HPE

The original version of this article was first published on Futurum Research.

Daniel Newman is the Principal Analyst of Futurum Research and the CEO of Broadsuite Media Group. Living his life at the intersection of people and technology, Daniel works with the world's largest technology brands exploring Digital Transformation and how it is influencing the enterprise. From Big Data to IoT to Cloud Computing, Newman makes the connections between business, people and tech that are required for companies to benefit most from their technology projects, which leads to his ideas regularly being cited in CIO.com, CIO Review and hundreds of other sites across the world. A five-time best-selling author whose most recent book is “Building Dragons: Digital Transformation in the Experience Economy,” Daniel is also a Forbes, Entrepreneur and Huffington Post contributor. An MBA and graduate adjunct professor, Daniel Newman is a Chicago native, and his speaking takes him around the world each year as he shares his vision of the role technology will play in our future.