The News: Intel has unveiled two new processors as part of its Nervana Neural Network Processor (NNP) lineup, aimed at accelerating both the training of artificial intelligence (AI) models and the inferences drawn from them.

The company showcased the AI-focused chips, dubbed Spring Crest and Spring Hill, for the first time on Tuesday at the Hot Chips conference in Palo Alto, California, an annual tech symposium held each August. Intel’s Nervana NNP series is named after Nervana Systems, the company it acquired in 2016. The chips were designed at Intel’s Haifa facility in Israel and are built to train AI models and to run inference on data to extract valuable insights.

Read the full story on TNW.

Analyst Take: The announcement of these two chips, one focused on AI training and one on AI inference, shouldn’t come as a surprise. Intel’s Nervana acquisition served as the company’s entrée into this space, and with its strategic shift toward being data-centric rather than focused on the data center itself, advancing its AI capabilities was a critical component. Given Intel’s significant lead in the data center, the timing of this release matters; if anything, I would have expected these chips a bit sooner. This isn’t to say that Intel Xeon Scalable processors with DL Boost lacked significant AI capabilities, but I believe the company needed a specialized chip to show its ambition to compete in both training and inference.

Speaking of competition, Intel won’t be without it in this space. Recent advances in inference from NVIDIA have opened the door to GPUs playing a more critical role not just in training but also in inference. Additionally, companies like Amazon and Google have built specialized processors to run inference on complex AI models.

Intel is creating some important differentiation from Google in particular, which has built a TPU designed to work only with TensorFlow. Intel, by contrast, is leaning on its ecosystem: its new chips are designed to work not just with TensorFlow but also with other deep learning frameworks, such as those developed by Baidu (PaddlePaddle) and Facebook (PyTorch). Ecosystem has always been one of Intel’s strong suits, and it underpins the company’s strength and low attrition rates among data center customers running its CPUs.

Furthermore, Intel is differentiating itself and shifting away from its traditional position that the CPU is the solution to everything by building custom ASICs (application-specific integrated circuits), like these two Nervana chips, which are dedicated to a specific purpose and offer the highest level of efficiency when used for it.

I believe that Intel is well positioned to play a critical role in the advancement of AI. While Amazon and Google will likely continue to build their own chips to support their hyperscale and AI endeavors, Intel will continue to play a key role in on-premises deployments as well as for smaller cloud providers.

An Overview of the Chips: From Intel’s Hot Chips Press Release:

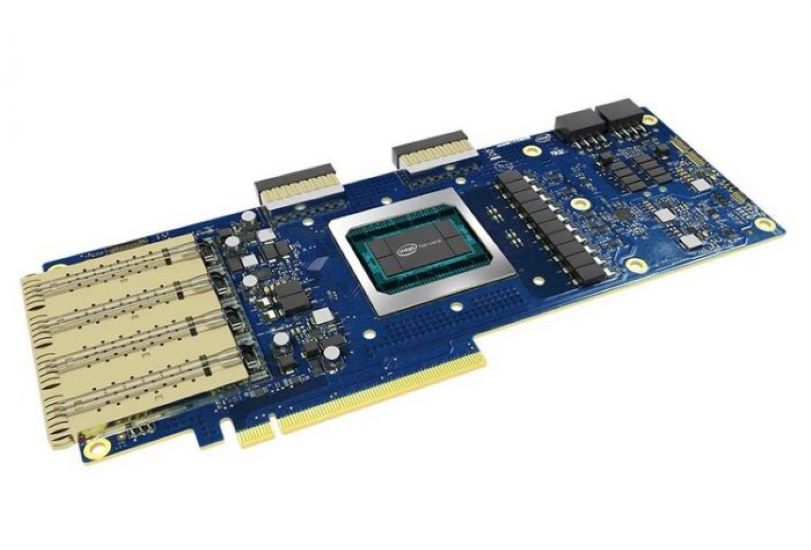

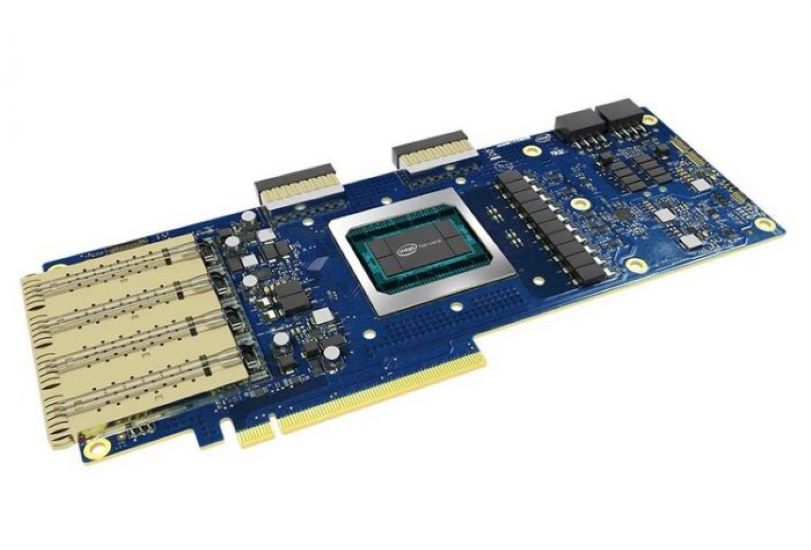

Intel Nervana NNP-T: Built from the ground up to train deep learning models at scale: Intel Nervana NNP-T (Neural Network Processor) pushes the boundaries of deep learning training. It is built to prioritize two key real-world considerations: training a network as fast as possible and doing it within a given power budget. This deep learning training processor is built with flexibility in mind, striking a balance among computing, communication and memory. While Intel® Xeon® Scalable processors bring AI-specific instructions and provide a great foundation for AI, the NNP-T is architected from scratch, building in features and requirements needed to solve for large models, without the overhead needed to support legacy technology. To account for future deep learning needs, the Intel Nervana NNP-T is built with flexibility and programmability so it can be tailored to accelerate a wide variety of workloads – both existing ones today and new ones that will emerge. View the presentation for additional technical detail into Intel Nervana NNP-T’s (code-named Spring Crest) capabilities and architecture.

Intel Nervana NNP-I: High-performing deep learning inference for major data center workloads: Intel Nervana NNP-I is purpose-built specifically for inference and is designed to accelerate deep learning deployment at scale, introducing specialized leading-edge deep learning acceleration while leveraging Intel’s 10nm process technology with Ice Lake cores to offer industry-leading performance per watt across all major datacenter workloads. Additionally, the Intel Nervana NNP-I offers a high degree of programmability without compromising performance or power efficiency. As AI becomes pervasive across every workload, having a dedicated inference accelerator that is easy to program, has short latencies, has fast code porting and includes support for all major deep learning frameworks allows companies to harness the full potential of their data as actionable insights. View the presentation for additional technical detail into Intel Nervana NNP-I’s (code-named Spring Hill) design and architecture.

Futurum Research provides industry research and analysis. These columns are for educational purposes only and should not be considered in any way investment advice.


Image: Intel

The original version of this article was first published on Futurum Research.

Daniel Newman is the Principal Analyst of Futurum Research and the CEO of Broadsuite Media Group. Living his life at the intersection of people and technology, Daniel works with the world’s largest technology brands exploring Digital Transformation and how it is influencing the enterprise. From Big Data to IoT to Cloud Computing, Newman makes the connections between business, people and tech that companies need to benefit most from their technology projects, which has led to his ideas being regularly cited in CIO.com, CIO Review and hundreds of other sites across the world. A five-time best-selling author, most recently of “Building Dragons: Digital Transformation in the Experience Economy,” Daniel is also a Forbes, Entrepreneur and Huffington Post contributor. An MBA and graduate adjunct professor, Daniel Newman is a Chicago native whose speaking takes him around the world each year as he shares his vision of the role technology will play in our future.